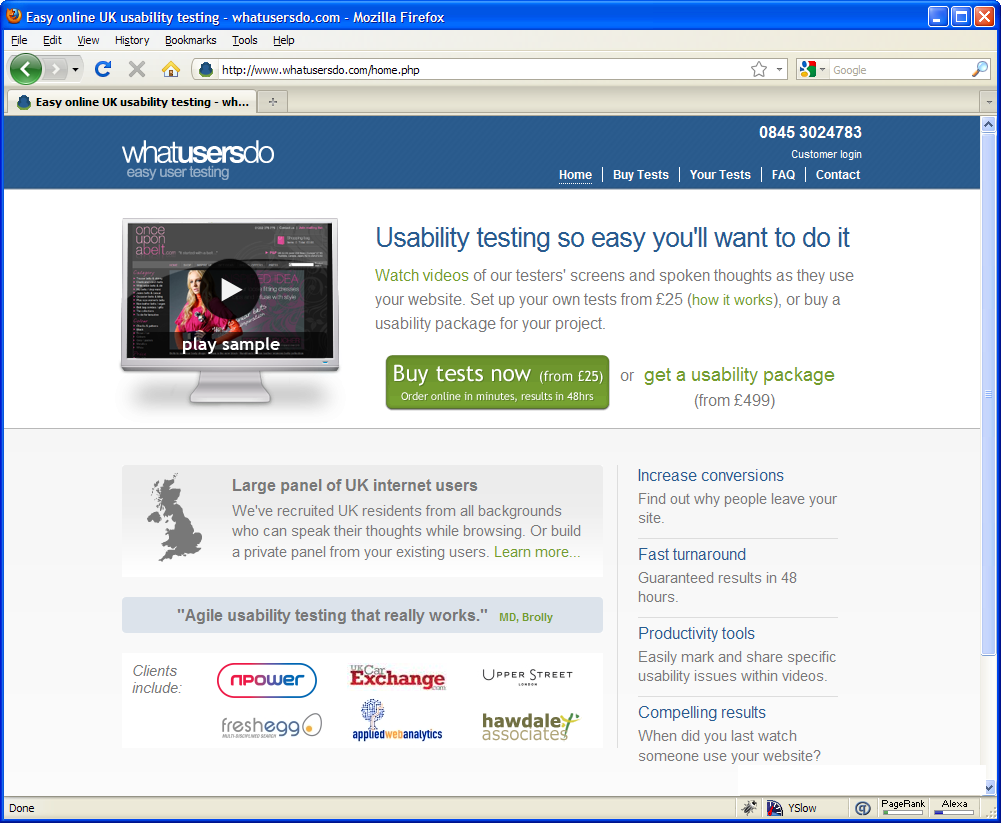

WhatUsersDo.com is a UK-based remote, unmoderated, qualitative usability testing platform, somewhat similar to usertesting.com. You pay £25–£30 per participant, and for each one you get back a 20-minute screen recording with audio of them thinking aloud during the tasks.

I’ve been aware of WhatUsersDo for a while now, but I have to admit, I’ve always shied away from using it. I’ve already got a healthy and well-established research programme at Madgex – do I really need to use a new web app?

Looking at it from afar, WhatUsersDo seems a bit of a strange beast. Normally I’d carry out qualitative, moderated user research (in which the researcher gets to interview the participants), or quantitative, unmoderated research (analytics, A/B testing, surveys, etc.). WhatUsersDo is a qualitative, unmoderated tool. Since it’s qualitative, you don’t get aggregated data – so you can’t quickly come to conclusions about the entire data set; and since it’s unmoderated, you don’t get to interview the participants – so you can’t keep them on track and drill into interesting topics as needed. To me, this isn’t an ideal combination.

Another thing that put me off is the fact that with the standard WhatUsersDo offering, your participants are provided from a pre-established panel, and you know very little about them. There are quite a few new web apps offering panel-based testing nowadays, so let me say this for the record: panels are not good unless you create and control them yourself. An experienced professional researcher will find it almost unthinkable to base any redesign, however small, on feedback from a bunch of unknown people. You should always aim to carry out research on your real target users – people who in real life would actually use your product, for whom the minutiae of your design decisions have real-world implications. If you test your site on random, unknown people, at best all you get is insight into whether a generic human is able to complete tasks without facing insurmountable usability problems.

However, now that I’ve seen WhatUsersDo in action, I’ve realised that just because it’s not for me, this doesn’t mean it’s not a useful tool for other people. It’s a good-value, entry-level tool. If you’re new to user research, and you want a cheap and easy way to dip your toe in the water, then WhatUsersDo is ideal for you. On the other hand, if you’re an established researcher then you’ll probably come to the same conclusions as me. Perhaps this will change in the coming months, as they do have a new “recruit your own users” feature coming out soon.

In order to give it a fair evaluation, I found a volunteer who seemed to be well suited to WhatUsersDo – Ixxy, a Brighton-based web development agency run by a friend of mine. Like many small agencies, they are technically capable but they don’t have an in-house User Experience researcher.

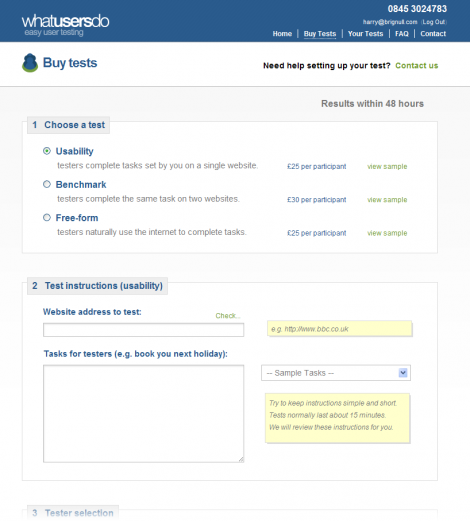

Setting up the study took about 10 minutes. We filled in one form and wrote the wording for a single task (shown below). This was incredibly easy.

Setting up the WhatUsersDo study

Having set up the study, all we had to do was sit back and wait. A few days later, the test videos were waiting for us online. As you can see below, there was only one metric for each user: a satisfaction score marked out of 100.

Out of the five videos we got back, a couple were duds. The participants either rambled on, or didn’t take the task very seriously (e.g. in a holiday booking task, one participant booked the first property she found on the first dates she chose, and didn’t blink at the price – totally unlike real-world holiday booking behaviour). However, three of the five videos revealed useful, actionable findings, and this was enough to make it seem worthwhile (£25 per person is, after all, very cheap).

There was one feature in particular that the tests showed to be problematic – a rather complex calendar view that users tended to get confused by. It was one of those features that was designed to the client’s specification, and the developers had always had reservations about it. For them, the video footage was great news, as it gave them exactly the evidence they needed to convince their client that the feature needed reworking.

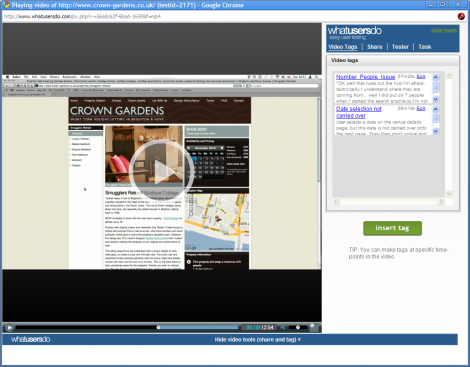

The WhatUsersDo video player offers a nice tagging tool (shown below), so you can add a text note whenever an interesting event occurs. Each note becomes a hyperlink that you can use to jump to that point in the video. I expect this would be very useful for team-based analysis, where you each pick a video to analyse and then later get together as a group and rapidly go through the video highlights.

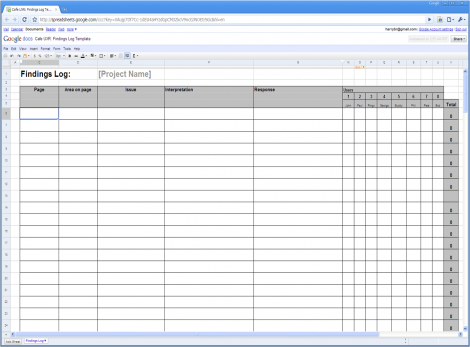

The tagging tool doesn’t give you quite enough functionality to manage the analysis of the video observations. This isn’t a big deal – you can create your own findings log very easily using something like Google Docs, shown below (template available here).

A very simple findings log template in Google Docs

Conclusions:

If you’re in the UK and you’ve never done any usability testing before, then WhatUsersDo is probably worth trying – it’s so cheap that even if it only reveals two or three usability issues, it’ll be worthwhile. What’s more, it’ll give you a taste for UX research, and that in itself is invaluable. If you’re an experienced researcher in a company that has a regular research budget, then it’s possible you’ll find it a bit low-end for your needs. It’ll be interesting to find out more about their “recruit your own users” feature when it comes out, but personally, as a veteran researcher, I’d always prefer to carry out a live interview than watch a pre-recorded video.

Disclosure: WhatUsersDo is an advertiser on this site and gave us free usage of the platform for the purpose of the review.

Harry – thank you for a very balanced review.

A small thing to add – we’ve recently started sending custom screeners for those clients who want better profile matching of panelists.

Email support@whatusersdo.com to find out more.

I’d take issue with your last paragraph’s assertion: the choice between WhatUsersDo/usertesting.com and moderated testing is not an either/or.

A company that I work with has repeatedly executed research plans that blend both approaches together throughout a project life cycle:

* Early: usertesting.com

* Early, again: usertesting.com

* Next: Moderated usability test

* Another: usertesting.com

* Multiple rounds (various international versions with language-specific participants): Moderated usability tests

* Final, for confirmation of a few details: usertesting.com

* Whoops, another final confirmation: usertesting.com

I would agree with Nathan. Different types of test can be applied to different stages of a given project.

Although one would ideally like to run a moderated session at every stage of a project, it may be acceptable to use a remote test if one is just trying to gain some quick insights or to test relatively minor changes to interactions that have already been tested.

@Nathan – my assertion is that the standard WhatUsersDo offering is an entry level tool – mainly because they use a predetermined panel of testers who you know little about. Are they your target users? Do they care about the problem your site tries to deal with? You just don’t know the answer.

As such, you can only hope to uncover baseline usability issues – “Can a human carry out task X without confusion”. Don’t get me wrong, these insights are very useful – it’s vastly better to carry out this kind of research than none at all!

However, once you’ve got these insights under your belt, your research questions will mature. Your questions go from “Are key tasks completable by humans in general?” to “Does our webapp cater to the needs, goals and expectations of our target user group / user community?”. Remember, usable is not the same as useful. It’s entirely possible to create a webapp that is very easy to use, yet fulfils no real needs in the target user group. You’re never going to find out if your app is useful by testing it on random people outside your target user group.

As Lee from WhatUsersDo pointed out in the first comment, they are now offering the ability to create your own panel, and to screen testers from their predefined panel. This sounds like a good step forward to me.

@Nathan – yes, it’s good to use different types of research during a product life-cycle, for sure! However, consider this. With a tool like WhatUsersDo or usertesting.com (remote, qualitative, unmoderated), users carry out a task for 20 minutes remotely. No measurements are taken; a screen recording is simply captured. Afterwards you have to watch that video, so you are going to spend 20 minutes one way or another. If you were to use a tool like WebEx, you could take the exact same amount of time but run the session as an interview (remote, qualitative, moderated). This means you can steer users away from rambling on about irrelevant stuff, and you can question them when something interesting happens. Obviously this is not for everyone, but the benefits are clear to see.

It’s definitely interesting to be able to hear users think out loud while performing tasks, but it seems like I would still have to sit through all the videos to gain my insights, so time-wise it doesn’t seem much of an improvement over being there with the participant.

Zephyr – yes, you do need to watch the videos to gain insight. But there is a massive time saving over being there with the participant, and that’s the time it takes to set up.

With our service you can set up tests in minutes without the hassle of recruitment, which can take a lot of time (and energy).

How do you recruit participants? How long does it take?